*This work was done during an internship at Microsoft AI+Research, Redmond, USA.

The goal is for machines to read and understand an arbitrary given context (such as a Wikipedia article) and answer questions about it. To achieve this, we need to give the machine the ability to think about the question while reading the context. This is done through attention: attending to the relevant parts of the question as we read through the context. A key concept in this work is that when we humans focus our attention, we take into account different levels of meaning. Sometimes we look for exact details: did this event happen in 2016 or 2017? Sometimes we think more abstractly and treat 2016, 2017, -37, and 3.14159 all as just numbers.

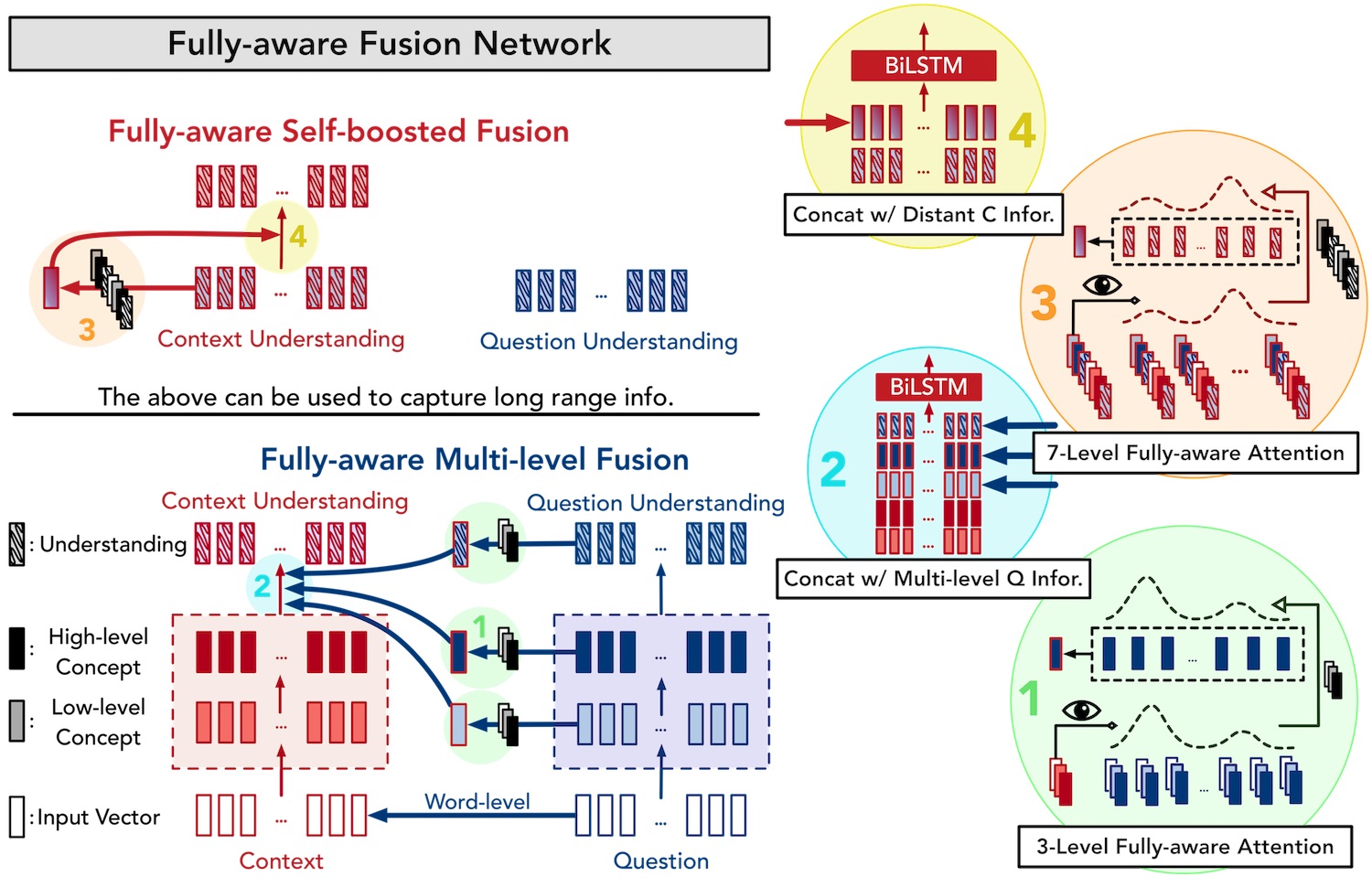

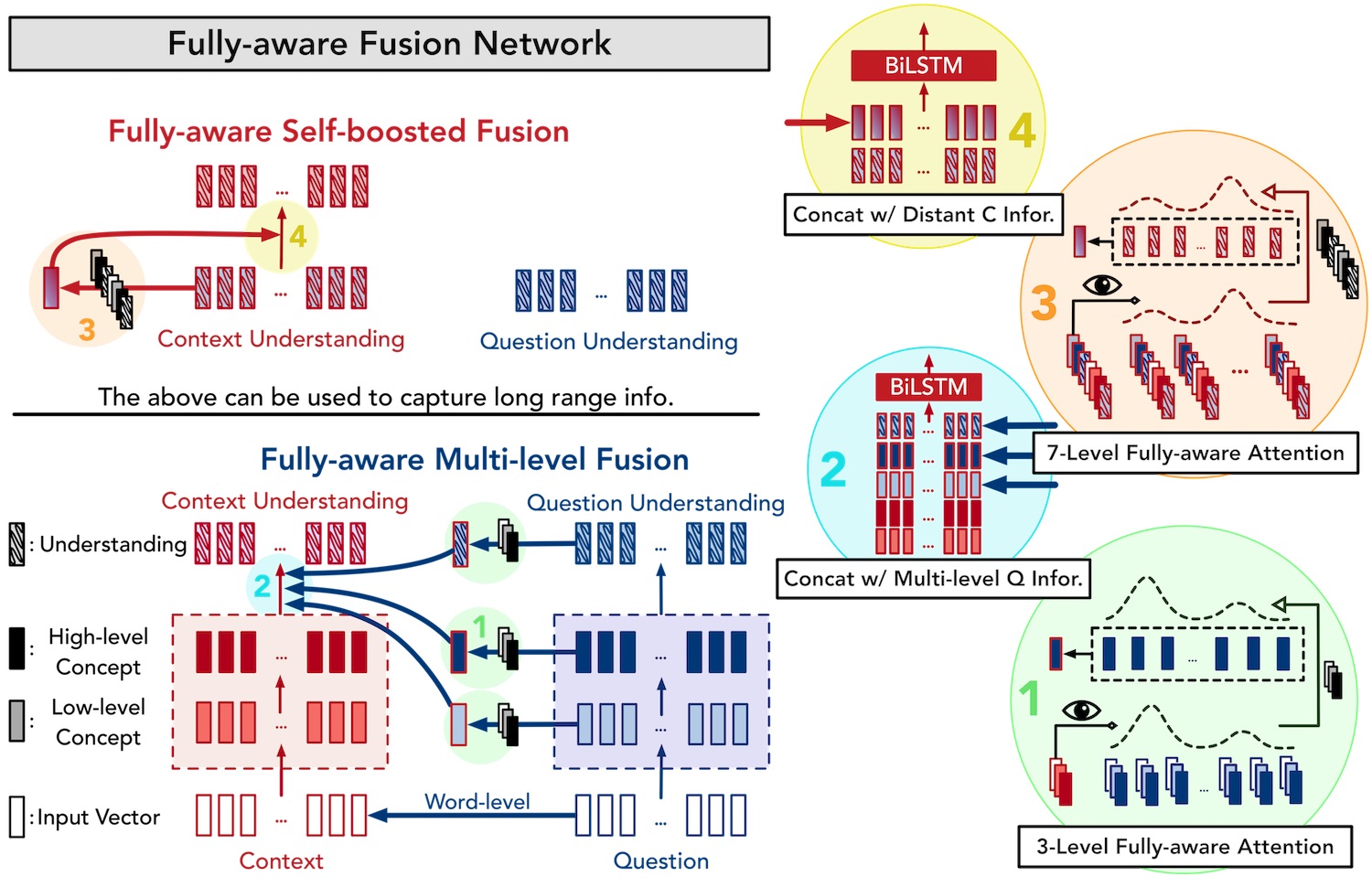

Motivated by this concept, we propose a lightweight enhancement for attention, fully-aware attention, and an end-to-end neural architecture, FusionNet, as illustrated above. Replacing standard attention with fully-aware attention improved performance significantly. At the end of my internship (Sep 20th, 2017), we achieved a new state of the art on the Stanford Question Answering Dataset (SQuAD). We also improved the previous best-reported number by +5% on an adversarial dataset for machine comprehension, and the approach shows decent improvement when applied to the natural language inference task.
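To make the "different levels of meaning" idea concrete, here is a minimal toy sketch of attention scored on a single level versus on several levels concatenated together. It is only an illustration, not the FusionNet implementation: the hand-made vectors, the helper names (`concat_levels`, `attention_weights`), and the plain dot-product scoring are all assumptions made for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def concat_levels(word, levels=None):
    """Flatten the chosen levels of one word into a single vector."""
    chosen = word if levels is None else [word[i] for i in levels]
    return [x for level in chosen for x in level]

def attention_weights(context_word, question_words, levels=None):
    """Attention of one context word over all question words.

    Scores are plain dot products (an assumption made for this sketch).
    """
    c = concat_levels(context_word, levels)
    scores = []
    for q_word in question_words:
        q = concat_levels(q_word, levels)
        scores.append(sum(a * b for a, b in zip(c, q)))
    return softmax(scores)

# Hypothetical hand-made representations, two levels per word:
#   level 0 = exact token identity, level 1 = abstract type [is_number, is_other]
question = [
    [[1.0, 0.0, 0.0], [1.0, 0.0]],  # "2016"
    [[0.0, 0.0, 1.0], [0.0, 1.0]],  # "happen"
]
context_word = [[0.0, 1.0, 0.0], [1.0, 0.0]]  # "2017"

exact_only = attention_weights(context_word, question, levels=[0])
all_levels = attention_weights(context_word, question)
print(exact_only)  # [0.5, 0.5]: exact identity alone cannot tell the question words apart
print(all_levels)  # roughly [0.73, 0.27]: the abstract "number" level favors "2016"
```

In this toy setup, "2017" and "2016" share nothing at the exact level, so attention over that level alone is uniform. Once the abstract level is included in the score, the context word attends more strongly to the other number in the question.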

Publication:

H.-Y. Huang, C. Zhu, Y. Shen, W. Chen. FusionNet: Fusing via Fully-aware Attention with Application to Machine Comprehension. Sixth International Conference on Learning Representations (ICLR 2018).