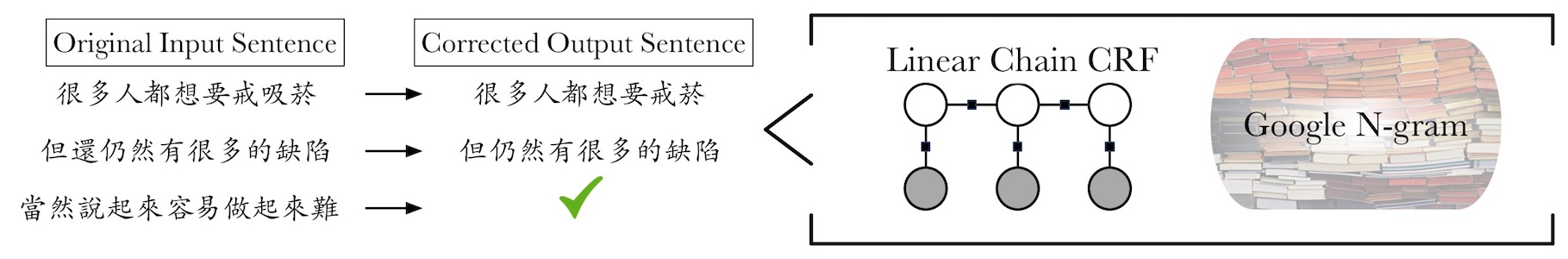

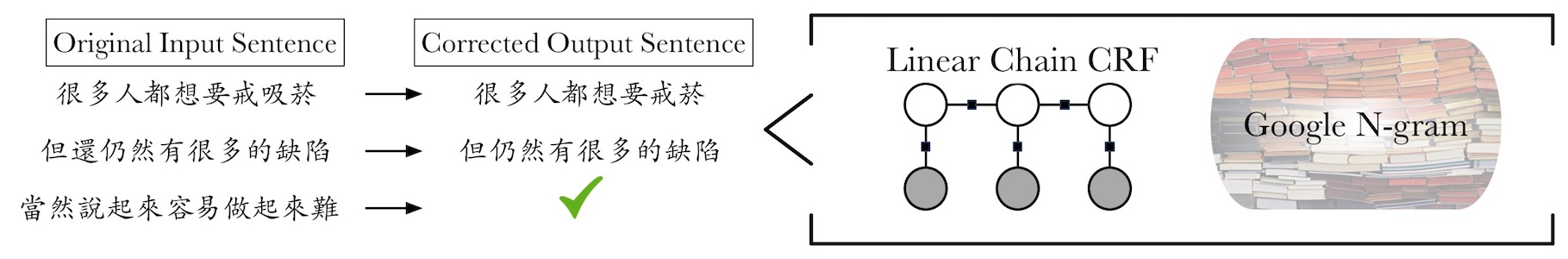

In this project, our goal is to identify and correct redundant words in Chinese sentences. The problem has two parts: identification and correction. For identification, we combine rule-based and language-model-based approaches. First, we use hypothesis testing to find erroneous trigrams (consisting of both POS tags and words) that collocate with redundancy. Then we extract probability information from the language model used in the Stanford POS tagger. The two sources of information are combined by training a logistic regression model on features from both methods. For correction, we tried two different strategies. One is to train a conditional random field that predicts a label for each word segment in a sentence (redundant or not). The other is to train a language model on the raw Google N-gram data and then remove the word segment whose removal substantially boosts the sentence's language model probability. Note that working with Google N-gram takes considerable effort, since the raw data alone occupies several terabytes.
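The language-model correction strategy can be illustrated with a minimal sketch. It is not the project's implementation: a tiny add-one smoothed bigram model trained on a toy corpus stands in for the model built from Google N-gram, and the names `train_bigram_counts`, `avg_log_prob`, `remove_redundant`, and the `min_gain` threshold are hypothetical. Scores are averaged per transition, which is an assumption made here so that shorter sentences are not favored merely for being shorter.

```python
import math
from collections import Counter

def train_bigram_counts(corpus):
    """Count unigrams and bigrams over segmented sentences, with boundary markers."""
    unigrams, bigrams = Counter(), Counter()
    for sent in corpus:
        tokens = ["<s>"] + sent + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def avg_log_prob(sent, unigrams, bigrams):
    """Average log probability per transition under an add-one smoothed bigram model."""
    tokens = ["<s>"] + sent + ["</s>"]
    vocab = len(unigrams)
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        total += math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab))
    return total / (len(tokens) - 1)

def remove_redundant(sent, unigrams, bigrams, min_gain=0.1):
    """Delete the single segment whose removal raises the score the most.

    The sentence is returned unchanged if no deletion improves the
    average log probability by more than min_gain (an assumed threshold).
    """
    base = avg_log_prob(sent, unigrams, bigrams)
    best_gain, best_idx = min_gain, None
    for i in range(len(sent)):
        candidate = sent[:i] + sent[i + 1:]
        gain = avg_log_prob(candidate, unigrams, bigrams) - base
        if gain > best_gain:
            best_gain, best_idx = gain, i
    if best_idx is None:
        return sent
    return sent[:best_idx] + sent[best_idx + 1:]

# Toy segmented corpus (illustrative only, not the project's data).
corpus = [
    ["我", "喜欢", "苹果"],
    ["我", "喜欢", "香蕉"],
    ["他", "喜欢", "苹果"],
]
unigrams, bigrams = train_bigram_counts(corpus)

corrected = remove_redundant(["我", "喜欢", "喜欢", "苹果"], unigrams, bigrams)
unchanged = remove_redundant(["我", "喜欢", "苹果"], unigrams, bigrams)
```

On this toy data, `corrected` drops the duplicated segment and `unchanged` is returned as given, since no deletion improves its score. A real system would need a far larger model and a threshold tuned on held-out data.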